VIM-303

The VIM-303 is a fully integrated vision guidance camera designed for pick-and-place, assembly, and material handling applications.

All In One

Conventional Solutions

Traditional robotic vision solutions require many components from different suppliers, greatly increasing complexity.

VIM-303

Visual Robotics has integrated a 3D camera, 2D camera, powerful vision processing, and robot guidance into the VIM-303, reducing the mechanical and electrical complexity.

Arm Mounted Advantage

Mounting the VIM-303 on the end of a robot arm provides unparalleled field of view and resolution.

Arm mounting increases the effective resolution of our 12 MP 2D camera to 53,000 megapixels!

Traditional overhead systems require rigid mechanical registration and struggle with resolution vs. field-of-view tradeoffs.

Arm-mounted design provides:

A large field of view and high resolution, with a single camera.

Moving closer provides increased resolution. Moving farther yields lower resolution but a larger field of view. Our camera’s field of view extends to the entire reach of the robot.

No extra vision hardware, lighting rigs, or gantries.
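
The resolution-versus-distance tradeoff described above can be illustrated with a simple pinhole-camera model: the width of the scene in view grows linearly with working distance, so each pixel covers more millimeters as the camera moves away. The sketch below is a generic illustration, not a VIM-303 specification. The 60° horizontal field of view and 4000-pixel sensor width are hypothetical values (4000 × 3000 is a typical 12 MP layout), and the function names are illustrative only.

```python
import math


def footprint_width_mm(distance_mm: float, hfov_deg: float) -> float:
    """Width of the scene visible at a given working distance (pinhole model)."""
    return 2.0 * distance_mm * math.tan(math.radians(hfov_deg) / 2.0)


def mm_per_pixel(distance_mm: float, hfov_deg: float, pixels_across: int) -> float:
    """Scene width covered by one pixel; smaller means finer resolution."""
    return footprint_width_mm(distance_mm, hfov_deg) / pixels_across


# Hypothetical parameters for illustration only, NOT VIM-303 specifications.
HFOV_DEG = 60.0
PIXELS_ACROSS = 4000  # width of a typical 4000 x 3000 (12 MP) sensor

for distance in (200, 500, 1000):
    width = footprint_width_mm(distance, HFOV_DEG)
    res = mm_per_pixel(distance, HFOV_DEG, PIXELS_ACROSS)
    print(f"{distance:5d} mm away: view {width:7.1f} mm wide, {res:.3f} mm per pixel")
```

Because the arm can place the camera anywhere in its reach, the same sensor can trade a wide view for fine detail simply by changing distance.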

Vision In Motion

US Patent #12,008,768.

Visual Robotics pioneered the ability of an arm-mounted camera to track still and moving objects even while the camera itself is moving. We call it Vision-in-Motion (VIM).

Our patented VIM technology frees robots from the need for fixed mounting, enabling practical deployment of portable or mobile robotic workcells.

Objects are tracked visually without the need for conveyor encoders or other equipment.
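
The general idea of tracking an object while the camera itself moves can be sketched as follows: each detection is mapped from the camera frame into the fixed robot base frame using the camera pose reported by the robot at that instant, and two timestamped base-frame positions give the object's velocity without a conveyor encoder. This is a generic constant-velocity sketch under stated assumptions, not Visual Robotics' patented VIM method. It assumes the camera pose is already known (from robot kinematics plus hand-eye calibration), uses pure translations for the pose, and all names (`Observation`, `estimate_velocity`, `predict`) are hypothetical.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat4 = list[list[float]]


def transform_point(T: Mat4, p: Vec3) -> Vec3:
    """Apply a 4x4 homogeneous transform (camera -> base) to a 3D point."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


def translation(tx: float, ty: float, tz: float) -> Mat4:
    """Pure-translation pose; a simplified stand-in for a full camera pose."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


@dataclass
class Observation:
    t: float          # timestamp in seconds
    T_base_cam: Mat4  # assumed camera pose in the base frame at time t
    p_cam: Vec3       # object position measured in the camera frame (mm)


def estimate_velocity(a: Observation, b: Observation) -> Vec3:
    """Base-frame velocity from two observations, assuming constant velocity."""
    pa = transform_point(a.T_base_cam, a.p_cam)
    pb = transform_point(b.T_base_cam, b.p_cam)
    dt = b.t - a.t
    return tuple((pb[i] - pa[i]) / dt for i in range(3))


def predict(obs: Observation, v: Vec3, t: float) -> Vec3:
    """Extrapolate the object's base-frame position to time t."""
    p = transform_point(obs.T_base_cam, obs.p_cam)
    return tuple(p[i] + v[i] * (t - obs.t) for i in range(3))


# The camera moves 50 mm in x between frames while the object moves 100 mm.
first = Observation(0.0, translation(0, 0, 500), (100.0, 0.0, -500.0))
second = Observation(0.5, translation(50, 0, 500), (150.0, 0.0, -500.0))
velocity = estimate_velocity(first, second)
target = predict(second, velocity, 1.0)
```

Expressing every detection in the base frame is what separates the object's motion from the camera's own motion; a real tracker would also filter noise over many frames rather than rely on two samples.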

Semantic Programming

Programs can incorporate visual picking and placement by simply selecting objects by name, while the camera automatically handles all image processing and motion planning. Programming can be performed in Polyscope, Blockly, or Python to suit your needs.

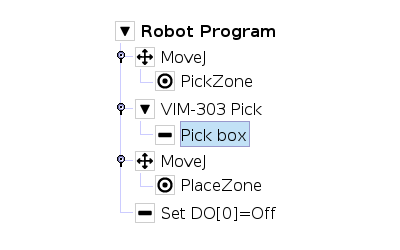

Polyscope

The VIM URCap enables simple picking by object name in Polyscope, the native programming environment of Universal Robots.

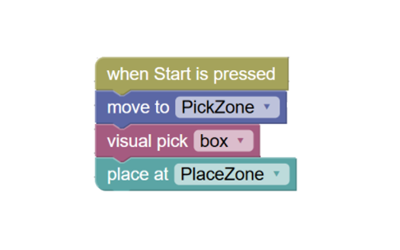

Blockly

Program the robot with visual, block-based coding; no prior robot programming experience is needed.

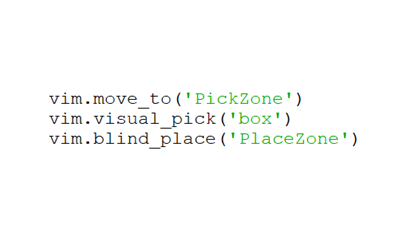

Python

Incorporate visual actions with Python scripting for more complex applications.

Need More Than a Camera?

If you need more than a camera for an existing setup, check out our Systems page.